MLOps Services

With our MLOps services, we simplify ML model operations. From automated deployment to scalable infrastructure and real-time monitoring, we remove bottlenecks and boost performance—ensuring reliability, faster time to market, and sustained accuracy.

Why Modern ML Needs MLOps

How MLOps Services Drive Business Value

Reduced Cost

Our MLOps Service Suite

Facing challenges with your ML pipeline? Wondering how much time and how many resources it will take to fix? Get a ballpark estimate.

Top Use Cases: Where MLOps Meets Business Value

A multinational tech company implements MLOps for AI-driven cybersecurity, where ML models analyze real-time network traffic, identifying zero-day threats and preventing cyberattacks before they occur.

A global bank uses MLOps to automate regulatory compliance audits with NLP. The system processes large volumes of legal documents and flags non-compliance risks in real time, reducing manual effort by 60%.

An autonomous car manufacturer (like Tesla) applies MLOps to continuously refine object detection and path prediction models, updating them with real-world driving data to improve safety and adaptability in different terrains.

An automotive manufacturer leverages MLOps for sensor-driven predictive maintenance. ML models deployed at the edge predict machinery failures, reducing downtime by 40% and optimizing supply chain efficiency.

MLOps as the Backbone of Generative AI Applications

Generative AI models are data-hungry, compute-intensive, and ever-evolving, making MLOps essential.

Technologies: The Engine Room

We constantly dig deeper into new technologies and push the boundaries of established ones, with one goal: delivering value to our clients.

Our Expertise in MLOps Across Leading Cloud Platforms

- Azure DevOps & GitHub for CI/CD

- MLflow & AutoML for tracking

- AKS for secure deployment

- RBAC & Private Link for security

- Data drift monitoring

- SageMaker Pipelines for automation

- SageMaker Model Monitor for monitoring

- Integration with AWS CodePipeline

- Lambda & ECS for Serverless

- Built-in AutoML & distributed training

- Native MLflow support

- Feature Store to reuse ML features

- Scalable Delta Lake-based data pipelines

- CI/CD with GitHub, Jenkins, Azure DevOps

- Automated model governance

Beyond Deployment: Continuous MLOps Support

MLOps isn’t just about deployment; it’s about keeping your models reliable, scalable, and high-performing over time. Our post-delivery support is built to do exactly that.

- We monitor model performance to ensure accuracy and reliability.

- We scale & optimize infrastructure for performance & cost efficiency.

- Security updates & compliance checks keep your ML ecosystem protected.

- We maintain and refine ML pipelines to improve efficiency.

The Spirit Behind Engineering Excellence

Frequently Asked Questions (FAQs)

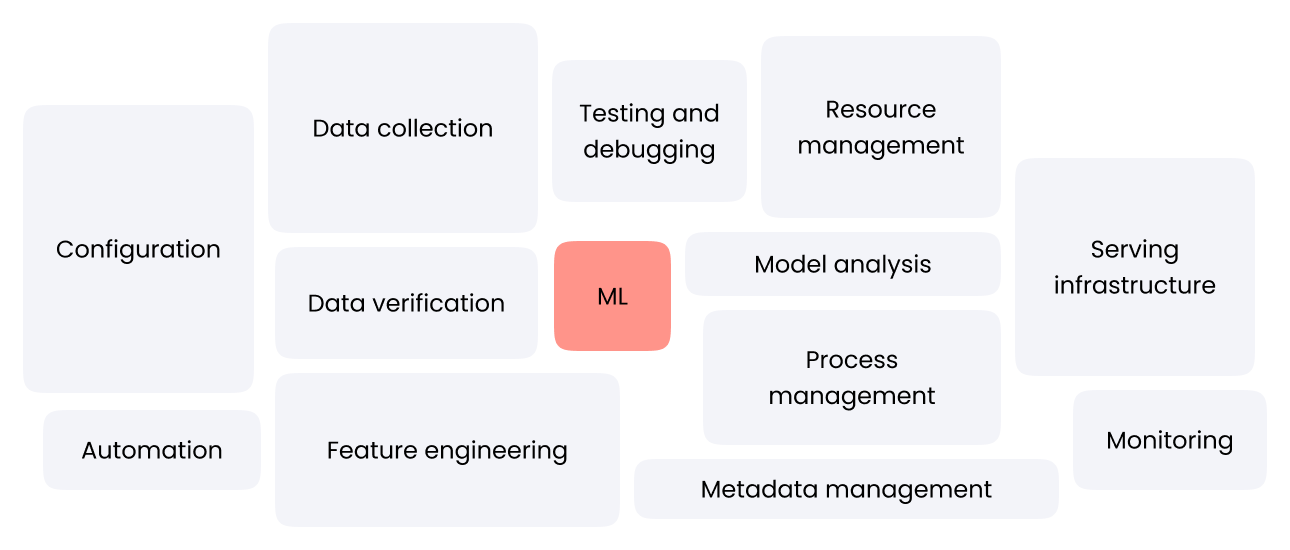

MLOps (Machine Learning Operations) is a set of practices that combines machine learning, DevOps, and data engineering to streamline the lifecycle of ML models. It focuses on automating processes like model training, deployment, monitoring, and management to ensure efficient and scalable AI solutions.

MLOps helps businesses ensure that their AI models are not just accurate but also reliable, scalable, and continuously improving. It minimizes operational bottlenecks, reduces deployment time, enhances collaboration between teams, and ensures compliance with security and governance standards.

While DevOps focuses on software development and deployment, MLOps extends these principles to machine learning models. It involves additional complexities like data management, model retraining, performance monitoring, and handling model drift, which are unique to AI-driven applications.

MLOps reduces time-to-market by automating the ML lifecycle, minimizing errors, and improving model performance through continuous monitoring and retraining. This leads to more reliable AI-driven decisions, reduced operational costs, and a higher return on investment.

Yes, even early-stage AI adoption benefits from MLOps. Implementing MLOps from the beginning ensures scalability, consistency, and efficiency in ML model development, preventing costly rework and inefficiencies in the future.

MLOps integrates governance, security, and compliance frameworks into the AI lifecycle. It ensures data privacy, maintains audit trails for model decisions, enforces access control, and helps meet regulatory requirements like GDPR, HIPAA, or financial regulations.

An MLOps pipeline typically includes data ingestion, data preprocessing, model training, validation, deployment, monitoring, and continuous retraining. Automation and version control are also crucial to ensure repeatability and efficiency.
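As a rough illustration of how these stages chain together, here is a minimal sketch in plain Python. Everything in it is hypothetical: the toy data, the function names (`ingest`, `preprocess`, `train`, `validate`, `run_pipeline`), the one-parameter model, and the `max_mae` quality gate are assumptions for the example, not part of any specific platform. A real pipeline would run each stage in an orchestrator and version the data and model artifacts.

```python
from statistics import mean


def ingest():
    # Hypothetical toy data: (feature, label) pairs standing in for a real source.
    return [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8), (None, 5.0), (5.0, 10.1)]


def preprocess(rows):
    # Drop rows with missing values.
    return [(x, y) for x, y in rows if x is not None and y is not None]


def train(rows):
    # Fit y = slope * x through the origin by least squares.
    numerator = sum(x * y for x, y in rows)
    denominator = sum(x * x for x, _ in rows)
    return {"slope": numerator / denominator}


def validate(model, rows, max_mae=0.5):
    # Mean absolute error on held-out rows, compared against a quality gate.
    mae = mean(abs(model["slope"] * x - y) for x, y in rows)
    return mae, mae <= max_mae


def run_pipeline():
    rows = preprocess(ingest())
    split = len(rows) - 2  # hold out the last two rows for validation
    model = train(rows[:split])
    mae, passed = validate(model, rows[split:])
    # "Deployment" here is just a flag; the model ships only if validation passes.
    return {"model": model, "mae": mae, "deployed": passed}


result = run_pipeline()
```

The point of the sketch is the gate between validation and deployment: a model that fails the check never reaches production, which is what makes the pipeline safe to automate.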

MLOps incorporates continuous monitoring tools that track data and model performance in real time. When model accuracy declines because data patterns have changed, automated retraining workflows are triggered to restore predictive accuracy.
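One way such a trigger might work is sketched below. It does not measure accuracy directly; it uses the Population Stability Index (PSI) on a single input feature as a proxy for changing data patterns. The function names, the bin count, and the 0.2 threshold are illustrative assumptions, and production monitors usually track many features and the model's outputs as well.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the baseline range
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(baseline, live, threshold=0.2):
    # Illustrative threshold; tune it per feature and per use case.
    return psi(baseline, live) > threshold


baseline = [i / 100 for i in range(100)]        # feature values seen at training time
shifted = [0.5 + i / 200 for i in range(100)]   # live values concentrated in the upper half
```

With these samples, `should_retrain(baseline, baseline)` is false and `should_retrain(baseline, shifted)` is true, so only the shifted traffic would kick off a retraining job.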

MLOps can be implemented using tools like Kubernetes, Docker, TensorFlow Extended (TFX), MLflow, Kubeflow, and Apache Airflow, along with cloud platforms like AWS SageMaker, Google Vertex AI, and Azure ML. CI/CD tools such as GitHub Actions and Jenkins are also commonly used.

MLOps seamlessly integrates with cloud platforms by leveraging managed services for storage, computing, orchestration, and AI model deployment. It enables auto-scaling, cost optimization, and easy collaboration across distributed teams.

Yes, MLOps can be implemented in on-premises environments using containerization (Docker), orchestration (Kubernetes), and CI/CD tools. Hybrid and multi-cloud architectures are also common to balance cost, security, and performance.

MLOps ensures that Generative AI models are trained, deployed, and monitored efficiently while addressing ethical concerns like bias detection, hallucination control, and safe content generation. It enables responsible scaling of AI-driven creativity.

Continuous Integration and Continuous Deployment (CI/CD) pipelines in MLOps automate model training, testing, and deployment. They ensure that models are updated regularly with minimal downtime while maintaining version control and rollback capabilities.
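The version control and rollback idea can be sketched with a small in-memory registry. The `ModelRegistry` class and its methods are hypothetical and exist only for this example; in practice a managed model registry and a deployment tool would play this role.

```python
class ModelRegistry:
    """Toy registry: stores model versions and tracks which one is live."""

    def __init__(self):
        self._versions = {}  # version -> model artifact
        self._history = []   # versions that have been live, oldest first

    def register(self, version, artifact):
        if version in self._versions:
            raise ValueError(f"version {version!r} already registered")
        self._versions[version] = artifact

    def promote(self, version, passed_tests):
        # Only registered versions that passed automated tests may go live.
        if version not in self._versions:
            raise KeyError(version)
        if not passed_tests:
            return False
        self._history.append(version)
        return True

    def rollback(self):
        # Retire the current version and return to the previous live one.
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self._history[-1]

    @property
    def live(self):
        return self._history[-1] if self._history else None


registry = ModelRegistry()
registry.register("v1", {"slope": 2.0})
registry.register("v2", {"slope": 2.1})
registry.promote("v1", passed_tests=True)
registry.promote("v2", passed_tests=True)
```

At this point `registry.live` is `"v2"`, and calling `registry.rollback()` returns the service to `"v1"` without retraining anything, because every promoted version stays stored.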

Common challenges include managing large datasets, ensuring data and model versioning, handling model drift, integrating with existing DevOps workflows, and maintaining security and compliance across the AI lifecycle.

Version control