Generative AI stands out as a game-changing breakthrough.

It’s powerful, it’s fast, and let’s be honest — it’s utterly fascinating!

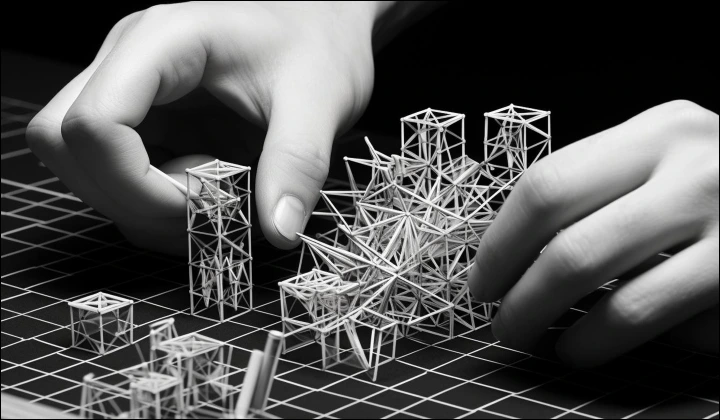

But behind every seemingly magical GenAI output lies a complex web of data challenges that companies are battling every day to make it accurate, fair, and trustworthy.

Tech giants like Google, Amazon, OpenAI, and Tesla are living these challenges and, in many cases, pioneering groundbreaking solutions.

In this article, we’ll explore the data challenges generative AI faces and how to overcome them effectively.

12 mins
